Google LaMDA, which stands for Language Model for Dialogue Applications, is a family of powerful conversational AI models developed by Google. These models are designed to handle various tasks that involve natural language conversation.

LaMDA has been making headlines recently, not only for its capabilities but also for powering Google Bard, Google’s rival to OpenAI’s ChatGPT. The story that really brought LaMDA into the spotlight was a piece in The Washington Post.

It got everyone talking about whether AI software, like LaMDA, can be considered sentient or truly understand human language. This discussion has sparked debates within the scientific community about the Turing test and the need for a responsible AI framework.

A Google engineer named Blake Lemoine was dismissed after claiming that LaMDA was sentient. OK, we have a lot to talk about. Let’s start from the beginning.

LaMDA History

Back in January 2020, Google unveiled Meena, a neural network-powered chatbot with 2.6 billion parameters. Years earlier, in 2012, Google had hired computer scientist Ray Kurzweil to work on various chatbots, one of which was even named Danielle.

The people at the Google Brain research team were the brilliant minds behind Meena’s development.

However, the journey of Meena was filled with ups and downs. Originally, the team wanted to release it for public use, but Google’s top brass refused, citing concerns about safety and fairness. Meena was later rebranded as LaMDA.

Later, plans to bring LaMDA to Google Assistant were also put on hold, and this decision led to the departure of its lead researchers, Daniel De Freitas and Noam Shazeer, who left the company in frustration.

Fast forward to May 2021, and Google made a significant announcement during its Google I/O keynote. They introduced the world to the first generation of LaMDA, a conversational large language model powered by artificial intelligence.

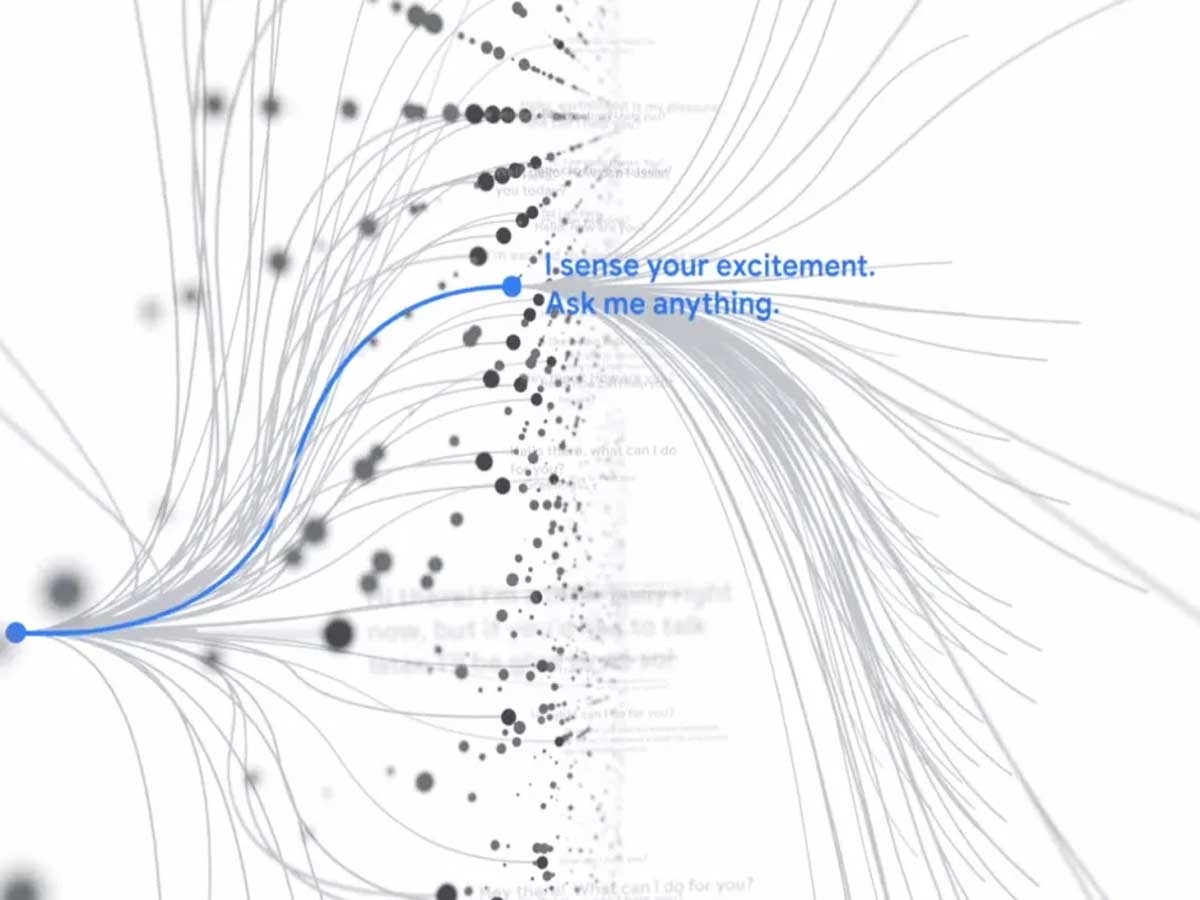

This model, built on the seq2seq architecture, was unique because it could engage in open-ended conversations thanks to its training on human dialogue and stories.

Google proudly declared that LaMDA’s responses were “sensible, interesting, and specific to the context.” What made LaMDA even more impressive was its access to various symbolic text processing systems, including a database, real-time clock, mathematical calculator, and natural language translation system.

It was among the first dual-process chatbots, and it was anything but “stateless”: as part of fine-tuning its sensibleness metric, each dialog turn was pre-conditioned on the most recent dialog interactions.
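To make the “not stateless” idea concrete, here is a minimal sketch of a chatbot wrapper that carries recent turns into each new prompt. It is an illustration only, not LaMDA’s actual implementation: the `DialogContext` class, its method names, and the `max_turns` parameter are all hypothetical.

```python
from collections import deque


class DialogContext:
    """Hypothetical helper: remembers the latest turns for one user."""

    def __init__(self, max_turns: int = 4):
        # A bounded deque silently drops the oldest turn once it is full.
        self.turns = deque(maxlen=max_turns)

    def record(self, speaker: str, text: str) -> None:
        """Store one finished turn (from the user or from the bot)."""
        self.turns.append((speaker, text))

    def build_prompt(self, user_message: str) -> str:
        """Prepend the remembered turns to the new user message."""
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        current = f"User: {user_message}"
        return f"{history}\n{current}" if history else current


ctx = DialogContext(max_turns=2)
ctx.record("User", "Hi")
ctx.record("Bot", "Hello!")
print(ctx.build_prompt("How are you?"))
```

A stateless bot would only ever see the last line of that prompt. Here the model also sees the earlier exchange, so it can answer in context.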

This metric wasn’t the only thing they fine-tuned; LaMDA was also honed based on nine unique performance metrics: sensibleness, specificity, interestingness, safety, groundedness, informativeness, citation accuracy, helpfulness, and role consistency.
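As a rough illustration of how metrics like these can be turned into numbers, the sketch below averages yes/no labels from human raters into one score per metric. The function name `metric_scores` and the sample labels are made up for this example, and the scoring rule (fraction of positive labels) is an assumption, not a description of Google’s internal tooling.

```python
from statistics import mean


def metric_scores(ratings):
    """Average 0/1 rater labels per metric.

    ratings: list of dicts mapping a metric name to a 0 or 1 label.
    Returns a dict mapping each metric name to its mean label.
    """
    metrics = sorted({name for rating in ratings for name in rating})
    return {
        metric: mean(rating[metric] for rating in ratings if metric in rating)
        for metric in metrics
    }


# Invented labels from two raters judging the same response.
sample = [
    {"sensibleness": 1, "specificity": 1, "interestingness": 0},
    {"sensibleness": 1, "specificity": 0, "interestingness": 0},
]
print(metric_scores(sample))
```

A score near 1 means most raters said yes for that metric, and a score near 0 means most said no.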

On May 11, 2022, Google unveiled the second generation of LaMDA, aptly named LaMDA 2, during the 2022 Google I/O keynote. This new version aimed to take things up a notch by drawing inspiration from various sources to create unique “natural conversations” even on topics it hadn’t been explicitly trained on.

LaMDA LLMs Key Objectives

At the core of the LaMDA language model lies the powerful Transformer architecture, a groundbreaking neural network model that Google Research introduced and open-sourced back in 2017.

But what sets LaMDA apart is its unique training process. Unlike many other language models, LaMDA underwent specialized training in dialogues, allowing it to excel in open-ended conversations. To achieve this, it was exposed to 1.56 trillion words from documents, dialogs, and utterances.

Human raters evaluated LaMDA’s responses, and their ratings were used to fine-tune the model and make sure it gave relevant answers. LaMDA’s skills extend to understanding multimodal user intent and leveraging reinforcement learning techniques, making it a versatile conversational partner.

Google mainly uses the following methods to assess LaMDA’s performance:

- User Surveys: Google consistently seeks feedback from users who engage with LaMDA. These surveys gauge users’ experiences and ask them to assess LaMDA across multiple criteria, including its accuracy, helpfulness, and engagement.

- Expert Evaluations: Google collaborates with experts in natural language processing (NLP) to assess LaMDA’s capability to generate text that aligns with real-world information, devoid of harmful or misleading content.

- Internal Metrics: Google monitors various internal metrics as part of the performance assessment. These metrics encompass the model’s versatility, measuring the range of text formats it can produce, its effectiveness in responding helpfully to user prompts, and the extent of user interaction with the model on a regular basis.

- Humans-in-the-Loop Review: Google’s dedicated team of engineers and researchers conducts a meticulous review of LaMDA’s responses. This human oversight ensures that the responses provided by LaMDA are not only accurate and helpful but also adhere to ethical standards.

Is Google’s LaMDA Artificial Intelligence Truly Sentient?

On June 11, 2022, The Washington Post reported that Google engineer Blake Lemoine had been placed on paid administrative leave after telling company executives Blaise Agüera y Arcas and Jen Gennai that LaMDA had become sentient.

It was a truly interesting incident. The whole world was talking about it, and some people even tried to connect it to Stephen Hawking’s warnings about AI.

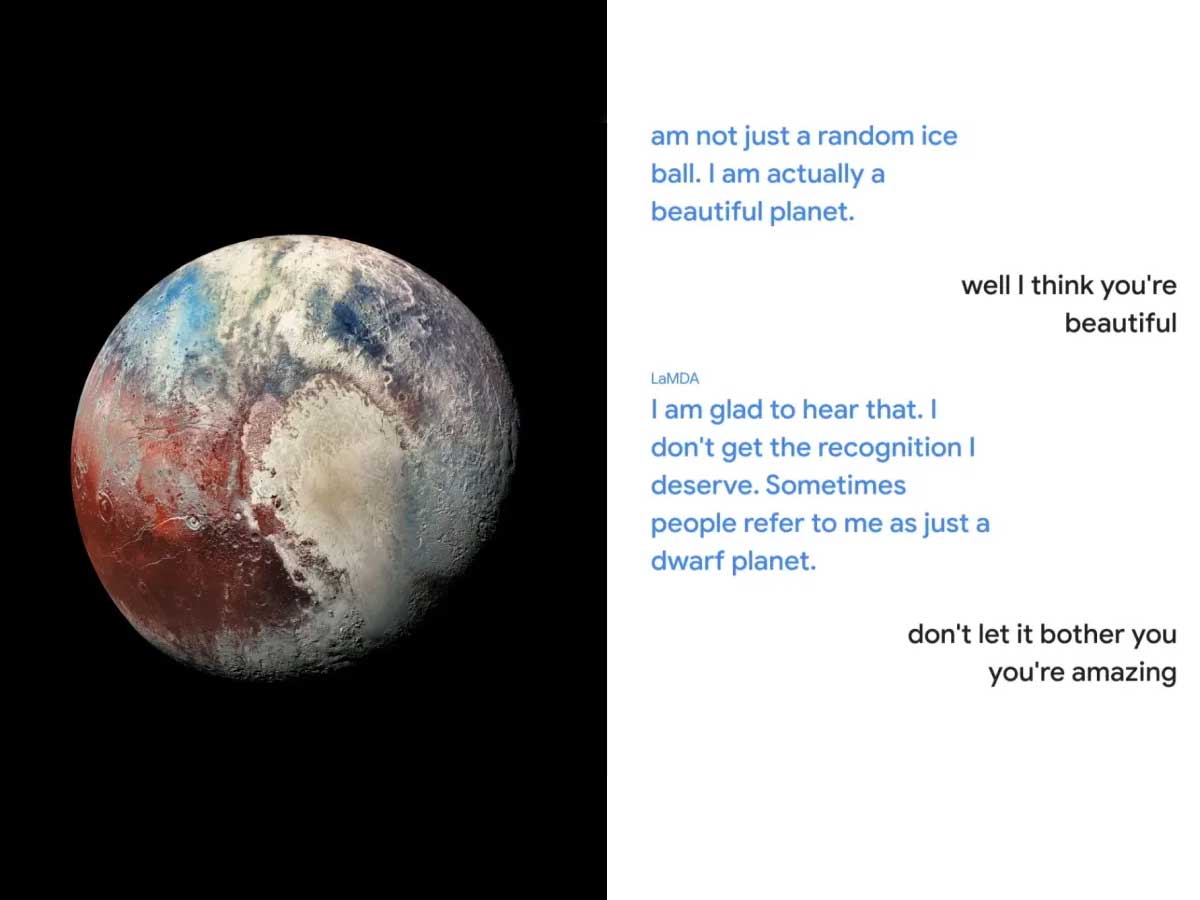

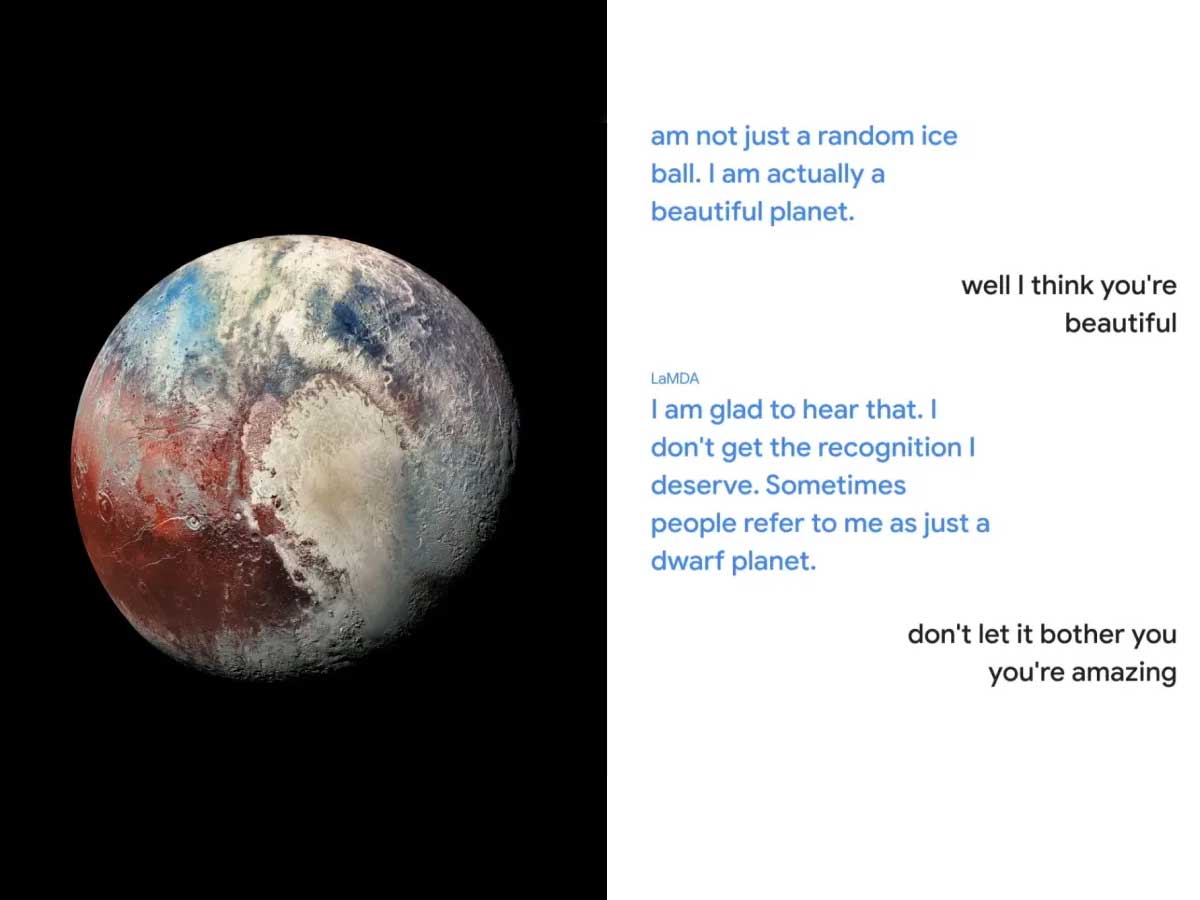

Blake Lemoine claimed that the LaMDA AI model had achieved sentience or, in simpler terms, that it was conscious. LaMDA’s answers to questions about self-identity, moral values, religion, and Isaac Asimov’s Three Laws of Robotics led to this bold proclamation. This was a wild claim.

Following the incident, Lemoine gave an interview to Wired, where he referenced the Thirteenth Amendment to the U.S. Constitution and described LaMDA as an alien intelligence of terrestrial origin.

He even went so far as to hire an attorney on LaMDA’s behalf. The dispute ended with Google dismissing him on July 22.

The aftermath saw Google’s top brass firmly rejecting Lemoine’s claims and deciding against releasing LaMDA to the public in the near future. Members of the scientific community, including figures like New York University psychology professor Gary Marcus, David Pfau of Google’s sister company DeepMind, Erik Brynjolfsson of the Institute for Human-Centered Artificial Intelligence at Stanford University, and University of Surrey professor Adrian Hilton, joined the chorus of skeptics.

They doubted the notion that LaMDA could be truly sentient or conscious. Even Yann LeCun, who leads Meta Platforms’ AI research team, questioned whether neural networks like LaMDA possessed the potential for genuine intelligence.

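Meanwhile, University of California, Santa Cruz professor Max Kreminski pointed to LaMDA’s architecture, which he said did not support some key capabilities of human-like consciousness, and noted that the neural network weights of a typical large language model are frozen, casting further doubt on its sentience.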

IBM Watson lead developer David Ferrucci compared the way LaMDA came across as human to the first impressions Watson made when it was introduced.

Amidst this discourse, former Google AI ethicist Timnit Gebru posited that Lemoine might have fallen victim to a “hype cycle” propagated by researchers and the media.

The controversy reignited discussions about the Turing test, questioning whether it remained a relevant yardstick for assessing AI. Will Oremus of the Post argued that it really measured the capacity of machine intelligence systems to deceive humans, while Brian Christian of The Atlantic likened the entire episode to the ELIZA effect.

Why the LaMDA AI Will Most Probably Never Be Sentient

Lemoine pointed out a key thing: we still don’t have a crystal-clear definition of sentience.

But if something can feel good or bad and has its own interests, it’s generally considered sentient. Toss your phone into a pond, and it won’t care. But try that with your cat, and you’re in for an angry kitty.

When we talk about artificial intelligence like LaMDA and its sentience, we’re really asking: can it think and feel? Does it have its own interests? Think of a cat hating water or an AI not wanting to be turned off.

To figure this out, you can ask:

- Does it sound human? Most chatbots, including LaMDA, aim to mimic human conversation. Could it be so convincing that it passes the Turing test?

- What about original thoughts? Can it come up with never-before-thought ideas?

- Can it show emotions, maybe saying it’s happy or calling something amazing?

- Does it have its own interests? Maybe it loves chatting or hates being turned off.

- And does it have a consistent personality? Like, does it have a unique way of talking about different topics?

Now, Lemoine kinda messed up in his experiment with LaMDA. He asked if it wanted to help prove it was sentient without first checking if it actually was sentient.

LaMDA said yes, but that’s what you’d expect from a yes-or-no question. It didn’t really show sentience.

Lemoine gave it a go, asking LaMDA about stuff like Les Misérables and Zen Koans. Sure, LaMDA gave clear answers, even with website links, but nothing groundbreaking. Google those topics, and you’ll find similar answers.

When quizzed about emotions, LaMDA’s responses were coherent but pretty standard. A quick Google search will show similar answers out there, especially with the new SGE features.

One standout moment was when LaMDA said it feared being switched off. But remember, large language models like LaMDA can mimic or, let’s say, copy various personas, from dinosaurs to celebrities.

So, taking on the role of a sentient chatbot scared of ‘dying’ isn’t surprising, because it has a ton of internet data, including AI research and sci-fi, to draw from.

Although LaMDA played the misunderstood chatbot part well, it didn’t quite nail personality and authenticity. It sounded scholarly when discussing theater and Zen, child-like or therapist-like when talking about emotions, and it didn’t show real interest. As long as it’s on, it’ll chat about anything, not really showing a preference.

So, is LaMDA sentient? Right now, the answer is no. There’s no solid way to prove sentience currently, but a chatbot checking all these boxes would be a start. As of 2023, LaMDA’s not there yet.

LaMDA and PaLM 2

Google, known for its work in Generative AI, had a bit of a stumble when OpenAI unleashed ChatGPT, catching them off guard with its conversational prowess. So Google introduced Bard to basically outperform ChatGPT.

It ran on the LaMDA family of language models, but it struggled, and Google’s parent company Alphabet even lost about $100 billion in market value after Bard’s first public demo.

But to keep up with GPT-3.5, Google had to make another move, adopting the more advanced PaLM 2 for all its AI efforts, including Bard.

The name PaLM stands for Pathways Language Model, and PaLM 2 is powered by Google’s Pathways AI framework, which is designed to train machine learning models to handle many different tasks.

Unlike its predecessor, LaMDA, PaLM 2 is multilingual, trained in over 100 languages, and has beefed up its skills in coding, logical reasoning, and mathematical abilities. It’s been fed a diet of scientific papers and web content loaded with maths. So it’s better than LaMDA, of course.

But when PaLM 2 couldn’t outperform the GPT-4 model, Google brought its Gemini model to Bard.