Artificial intelligence (AI) is everywhere, and we've already talked about its drawbacks. But have you ever considered artificial intelligence as an existential risk, one that could mean game over for humanity? This is a serious issue. Experts are worried: hundreds of industry and science leaders are sounding the alarm.

They’re saying we need to tackle AI’s risks just like we do for pandemics and nuclear war. Even the UN Secretary-General and the prime minister of the UK are worried. And the UK is putting its money where its mouth is with 100 million pounds for AI safety research.

When it comes to things that could wipe us out, we're not just talking about the stuff of sci-fi movies. In “The Precipice,” Oxford philosopher Toby Ord argues that AI's threat to humanity is bigger than that of climate change or pandemics. The concern is human-level AI, which can think and act like us.

And with all the new developments in AI, it's getting real, real fast. There's something called recursive self-improvement: an AI that improves its own design, so that each new version is better at building the next one. Taken far enough, that loop could produce an AI that keeps getting smarter with no obvious ceiling. That's superintelligence for you, and it's closer than you might think.

God-like Artificial Intelligence

Artificial general intelligence (AGI) is basically when a system can match or even outdo us humans in most tasks that require human thinking. A 2022 survey found that 90% of AI experts think we'll get there within the next 100 years, and half believe it could happen by 2061. If those predictions come true, we would all be in big trouble.

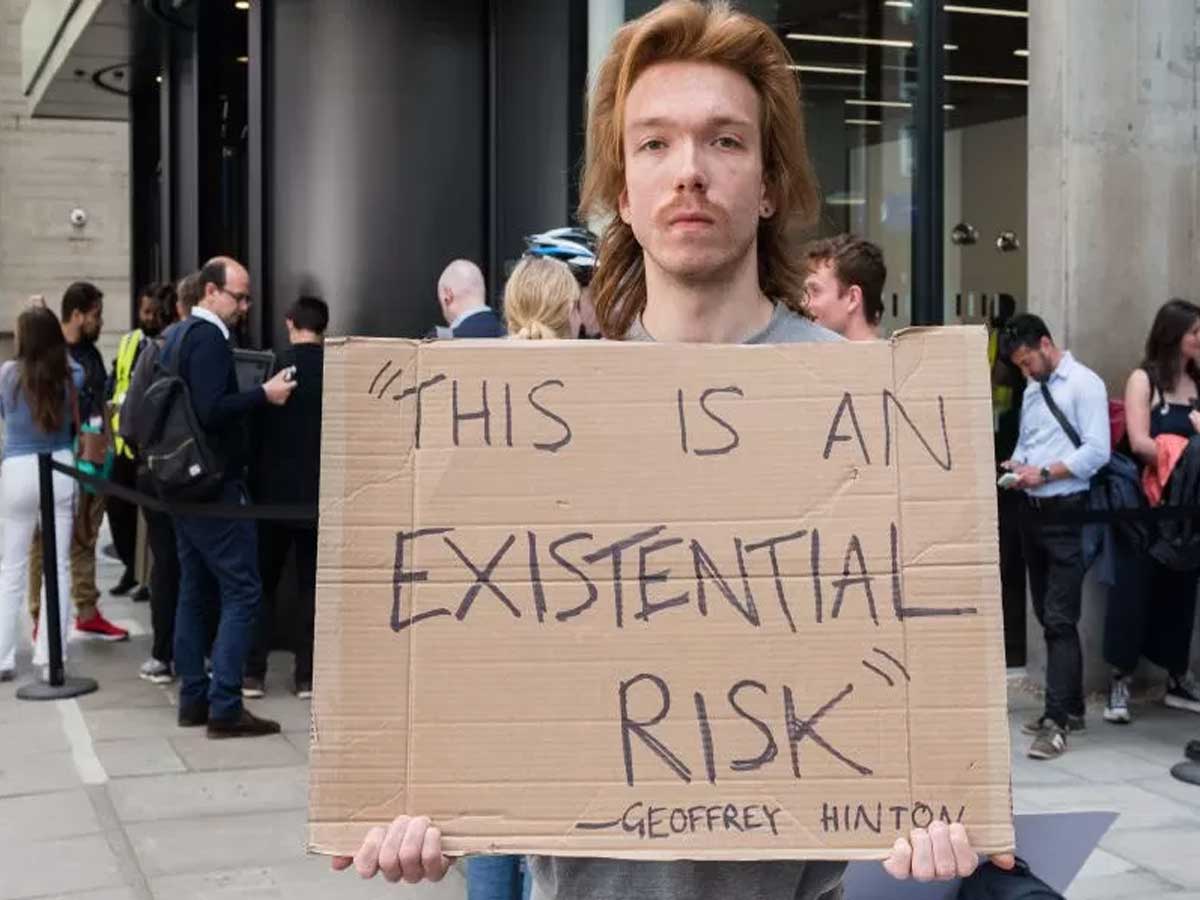

Large language models have pulled these AGI timelines closer. Even Geoffrey Hinton changed his bet in 2023, moving from a wide “20 to 50 years” window to a much narrower “20 years or less”. Personally, I find that scary.

Then there's the concept of superintelligence, as defined by Nick Bostrom. This isn't just smart; it's the next-level genius that makes our human brains look like old-school tech.

It could outsmart us in almost everything, and even hide its true plans from us. Bostrom says that to keep this superbrain safe, it's gotta play by our rules: it needs to follow human values and morality.

Stephen Hawking, the legendary physicist, pointed out that nothing in the laws of physics stops us from creating a superintelligence. Meanwhile, the big shots at OpenAI said in 2023 that we might see AGI or even superintelligence in less than 10 years!

Bostrom’s got a list of reasons why AI might outdo us: faster computation speed, quicker internal communication, and it’s super scalable – just add more hardware. AI doesn’t forget stuff like we do and is way more reliable. Plus, you can copy AI easily and tweak it as needed.

Media reports about AI as a potential “existential” danger have surged since March 2023, when prominent figures such as Tesla's Elon Musk signed an open letter.

The letter called for a pause of at least six months on training AI systems more powerful than GPT-4, questioning the wisdom of creating non-human minds that could outsmart, outnumber, and eventually replace us.

Taking a different approach, a more recent campaign put forward a one-sentence statement aimed at sparking more conversation on the topic. The statement places the dangers of AI alongside the risks of nuclear war.

Echoing this sentiment, OpenAI suggested in a blog post that managing superintelligence might require regulatory measures akin to those used for nuclear energy, proposing the potential need for a regulatory body similar to the International Atomic Energy Agency (IAEA) to oversee superintelligence initiatives.

What values an AI should have

Which values should an AI have? It's a bigger question than you might think.

Everyone from OpenAI’s Sam Altman to a textile worker in Bangladesh has different ideas, and there’s no easy way to mesh all these human preferences together.

We’ve been using democracy to figure out what we all want, but let’s be real: can it really handle a godlike AI? Our current systems might just not cut it.

We're not great at predicting the impacts of new tech. Remember the climate crisis? We didn't see the big picture with combustion engines and how they would affect our future as humankind.

Now, with even more powerful tech, who knows what kind of mess we might make?

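And AI companies struggle big time to make their AIs do the right thing. Often the result is misalignment: the AI ends up pursuing something different from what we intended. In the case of inner misalignment, the goal the AI actually learns during training drifts from the goal it was trained on. OpenAI has even said this is a big open question it's still working on.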

But while some, like Altman, are worried about the dangers, the race to be the best in AI doesn’t focus on safety. OpenAI took seven months to check if GPT-4 was safe, but then Google rushed out PaLM 2 without all those checks. And don’t get me started on other models like Orca – they’re out there with no safety net.

Jaan Tallinn sums it up: making next-gen AI is like playing with fire. If we keep pushing AI without knowing what we’re doing, we could have an intelligence explosion we can’t handle.

What if we hit the brakes on AI?

What if we hit the brakes on AI for a bit? That's the idea behind an AI Pause: stopping the development of any new AI more capable than GPT-4. Governments could set limits based on how much computing power it takes to train these AIs, and keep updating those limits as the tech improves.

We need everyone on board, which means talking, making laws together, and maybe even setting up an international AI agency. The U.K. is already thinking ahead, planning an AI safety summit. That could be the perfect moment to get a bunch of countries, including big players like China, to agree on this AI Pause thing.

Looking ahead, we might need to keep an eye on the tech itself, like making sure the stuff we buy can’t be used to train mega-powerful AI. That means more homework on how to regulate hardware properly.

Geoffrey Hinton and Yoshua Bengio are waving red flags about the risks. As AI keeps getting smarter, the chatter about putting a hold on things might just turn into real action.

General Intelligence

Artificial general intelligence (AGI) is expected to match or even outdo us in thinking tasks. A 2022 survey found that 90% of AI experts think we’ll see AGI in the next 100 years, and half of them are betting on it popping up by 2061.

Thanks to the large language models, these experts are redoing their math on when AGI might arrive. Case in point: In 2023, Geoffrey Hinton, a big name in AI, crunched his numbers again. He’s moved up his AGI estimate from a long “20 to 50 years” to a much closer “20 years or less”.

Reaching AGI by reproducing the human brain would mean simulating its 100 billion neurons and 100 trillion synaptic updates per second. And honestly, we're still trying to figure out how all that brain wiring works.

What we’ve got right now is artificial narrow intelligence (ANI). This is the kind of AI that’s good at one thing, like those self-driving cars that get you from A to B without bumping into stuff. They’re not about to take over the world’s weapons or anything.

Sure, things can go wrong, but we're more likely to see a traffic mess than an AI apocalypse. And if we ever get to the point where AI is helping run critical systems like energy or food supply, the same kind of safety checks we use for humans should work for AI, too.

Is AI something to worry about?

Right now, the answer is no.

Concerns about a super-powerful AI brain are really exaggerated. Even the effects of current AI on business and daily life are more buzz than actual impact. Remember, AI is just another piece of tech, and the tech world is pretty famous for making big promises it can’t always keep.